How Private Investigations Expose AI-Generated Evidence

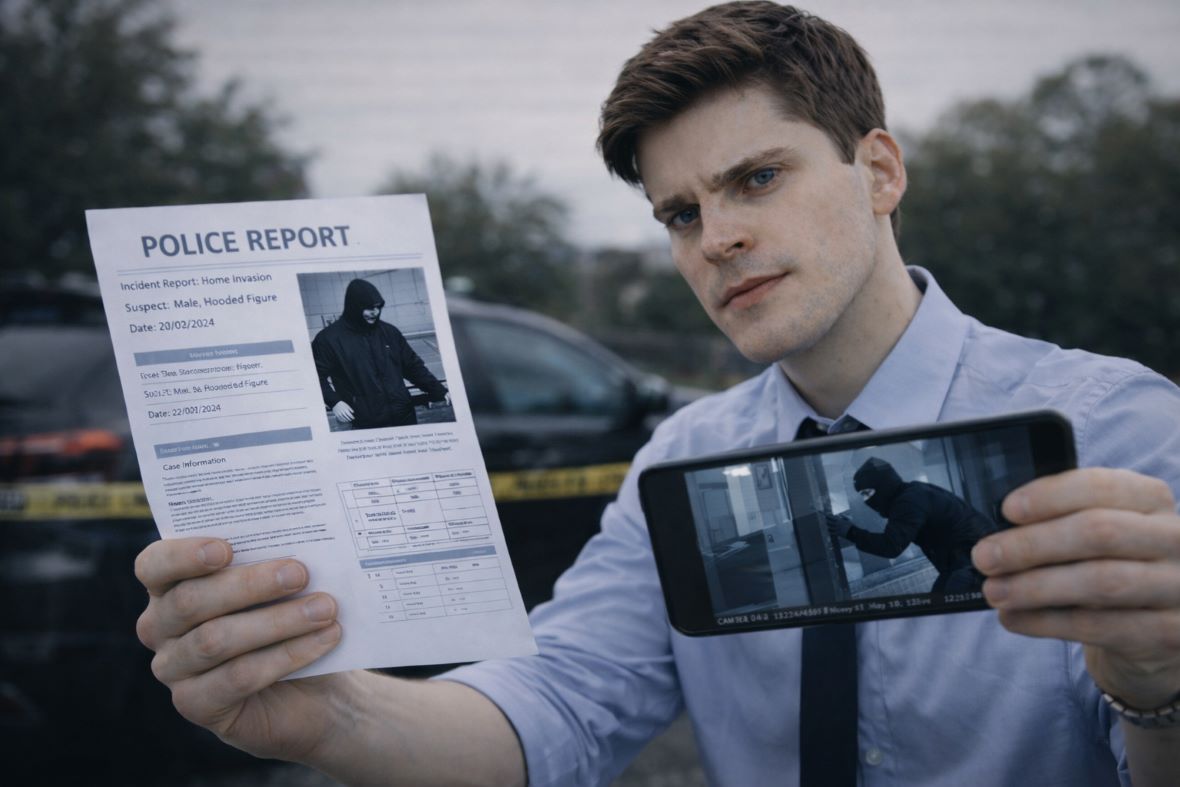

The rise of sophisticated technology has fundamentally shifted how we view digital proof in legal and personal matters. You might think that a photo or a voice recording is undeniable proof of a specific event, but the emergence of AI-generated evidence has turned this logic on its head. In the past, seeing was believing; today, seeing is often only the first step in a much longer verification process.

As artificial intelligence becomes more capable of creating hyper-realistic media, the role of private investigations has expanded beyond traditional surveillance to include specialised digital forensics. Distinguishing a genuine moment from a computer-generated fabrication requires a blend of technical skill and old-fashioned detective work. If you find yourself facing digital evidence that doesn't feel right, understanding how these fabrications work is your first line of defence.

Why Deepfakes and Synthetic Media Are Changing Legal Cases

Digital deception is no longer limited to big-budget movies or high-stakes international espionage. Fake photos, manipulated audio, and synthetic videos are now appearing in local courtrooms, workplace disputes, and even family law cases. A person might present a "recording" of a conversation that never happened or a "photo" placing someone at a location they never visited. These fabrications, often called deepfakes, are designed to deceive the human eye and ear by mimicking the likeness and voice of real people with startling accuracy.

For anyone involved in a legal dispute, this shift is alarming. Because the tools to create synthetic media are widely available, almost anyone can produce a convincing fake with a little patience. As a result, private investigators must now place far greater emphasis on digital expertise. An investigator can't simply watch a video; they have to verify where it came from, how it travelled between devices and online services, and whether its internal structure is consistent with its claimed origin.

The danger lies in the emotional weight that visual and audio evidence carries. A jury or a judge is naturally inclined to trust what they see and hear. When AI-generated evidence is introduced, it can unfairly sway a case before the other side even has a chance to object. Private investigators in Sydney act as a shield against this, using their skills to show that what appears to be a mountain of evidence is, in fact, a carefully crafted digital illusion. They understand that seeing is no longer believing, and that proof must be verified before you can rely on it in a legal matter.

The Common Signs of Tampered Digital Files

While artificial intelligence is brilliant, it’s not perfect. If you look closely at AI-generated evidence, you can often find “glitches” that reveal its synthetic nature. These mistakes happen because the AI is predicting what a scene should look like rather than recording what is actually there. Recognising these signs can help you feel more in control of digital evidence and less vulnerable to deception.

- Inconsistent Lighting and Shadows: AI often struggles with how light interacts with complex surfaces. Look for shadows that point the wrong way or reflections in eyes and glasses that don’t match the surrounding environment.

- Warped Backgrounds: Often, the person in the centre of an AI image looks convincing, but the background is a mess. Look for "breathing" walls in videos or blurry, distorted objects in the distance.

- Robotic Speech Patterns: In audio fakes, the voice might sound convincing, but the pacing is off. Listen for a lack of natural breathing, strange pronunciations of common words, or a flat emotional tone.

- Physical Anomalies: AI famously struggles with human anatomy. Extra fingers, missing earlobes, or jewellery that seems to blend into the skin are strong signs of a fake, although newer tools make these errors less often.

How Experts Verify Local and Digital Records

When a case moves into the professional realm, investigators go beyond visual inspection and examine the "bones" of a digital file. This starts with metadata: the hidden information embedded in most digital files. Metadata can show when a photo was taken, what device was used, and whether the file was opened in editing software such as Photoshop. If a photo claims to be from a specific date but its metadata shows it was created by an AI generator a week ago, the opposing side's case begins to crumble. Because metadata can be stripped or edited, investigators treat it as one strong clue rather than proof on its own. Relying on expert verification can give you peace of mind about the authenticity of the evidence.
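To make the idea of metadata more concrete, here is a minimal Python sketch that reads the plain-text (`tEXt`) chunks of a PNG image, one place where editing software may record its name under the standard `Software` keyword. The helper names (`read_png_text_chunks`, `make_chunk`, `flag_text_metadata`) and the watch-list of words are hypothetical choices for illustration only. The sketch ignores compressed `zTXt`/`iTXt` chunks and the EXIF data most camera photos carry, and since metadata can be stripped or edited, a clean result proves nothing by itself.

```python
import struct
import zlib
from typing import Dict, List

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Hypothetical watch-list: words a reviewer might want to look at more closely.
SUSPICIOUS_HINTS = ("photoshop", "stable diffusion", "midjourney", "dall")


def read_png_text_chunks(data: bytes) -> Dict[str, str]:
    """Return keyword -> text for every uncompressed tEXt chunk in a PNG."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    chunks: Dict[str, str] = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, body, 4-byte CRC.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if ctype == b"tEXt":
            # tEXt body is "keyword\x00text", both Latin-1 encoded.
            keyword, _, text = body.partition(b"\x00")
            chunks[keyword.decode("latin-1")] = text.decode("latin-1")
        if ctype == b"IEND":
            break
        pos += 12 + length
    return chunks


def make_chunk(ctype: bytes, body: bytes) -> bytes:
    """Build one PNG chunk with a correct CRC (used here to create demo data)."""
    crc = zlib.crc32(ctype + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + ctype + body + struct.pack(">I", crc)


def flag_text_metadata(chunks: Dict[str, str]) -> List[str]:
    """List text entries whose value contains a watch-list word."""
    flags = []
    for key, value in chunks.items():
        if any(hint in value.lower() for hint in SUSPICIOUS_HINTS):
            flags.append(f"{key}: {value}")
    return flags


if __name__ == "__main__":
    demo = (PNG_SIGNATURE
            + make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
            + make_chunk(b"tEXt", b"Software\x00Adobe Photoshop")
            + make_chunk(b"IEND", b""))
    print(flag_text_metadata(read_png_text_chunks(demo)))  # prints ['Software: Adobe Photoshop']
```

A flagged entry is only a lead for further checking: an honest photographer may have cropped an image in an editor, and a forger may simply delete the chunk.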

Private investigators in Melbourne use specialised forensic software to analyse file headers and raw hexadecimal data. They look for signs of "re-encoding," which happens when a file is saved multiple times or altered. If a video has been processed by an AI tool, it often leaves a digital "fingerprint" that isn't visible to the naked eye but can be detected through forensic analysis.
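The header step can be illustrated with a short Python sketch that compares a file's first bytes (its "magic number" signature) with the type its extension claims, and prints a hex preview like a hex viewer would. The signatures listed are standard ones (JPEG, PNG, GIF, PDF, the `ftyp` box of MP4-family files, and the `RIFF`/`WAVE` header of WAV audio), but the function names and the extension table are hypothetical. This covers only the header check; spotting re-encoding or AI fingerprints requires far deeper analysis than a signature match.

```python
import os
from typing import Optional

# Standard signatures found at the very start of common evidence formats.
SIGNATURES = {
    "jpeg": b"\xff\xd8\xff",
    "png": b"\x89PNG\r\n\x1a\n",
    "gif": b"GIF8",
    "pdf": b"%PDF-",
}

# Hypothetical, non-exhaustive map of extension -> type the file should contain.
EXTENSION_TO_TYPE = {
    ".jpg": "jpeg", ".jpeg": "jpeg", ".png": "png", ".gif": "gif",
    ".pdf": "pdf", ".mp4": "mp4", ".wav": "wav",
}


def detect_type(header: bytes) -> Optional[str]:
    """Guess a file's real format from its first bytes; None if unknown."""
    for name, signature in SIGNATURES.items():
        if header.startswith(signature):
            return name
    # ISO base media files (MP4 and relatives) carry 'ftyp' at offset 4.
    if header[4:8] == b"ftyp":
        return "mp4"
    # WAV audio is a RIFF container whose form type at offset 8 is 'WAVE'.
    if header[:4] == b"RIFF" and header[8:12] == b"WAVE":
        return "wav"
    return None


def extension_matches_content(filename: str, header: bytes) -> bool:
    """True only when the extension's expected type equals the detected type."""
    expected = EXTENSION_TO_TYPE.get(os.path.splitext(filename)[1].lower())
    return expected is not None and expected == detect_type(header)


def hex_preview(header: bytes, count: int = 16) -> str:
    """Space-separated hex of the first `count` bytes."""
    return header[:count].hex(" ")


if __name__ == "__main__":
    png_bytes = b"\x89PNG\r\n\x1a\n" + b"\x00" * 8
    print(hex_preview(png_bytes))
    print(extension_matches_content("holiday.jpg", png_bytes))  # False: a PNG renamed to .jpg
```

A mismatch does not prove fabrication, since files are often renamed innocently, but it tells an investigator the file has not simply come straight off a camera or phone.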

Beyond the technology, investigators use plain logic to debunk fakes. They might cross-reference a suspicious photo with weather reports from that day, or check whether the shadows in the image match the sun's position at that time and location. By combining high-tech forensic tools with traditional fact-checking, they can build a timeline that shows whether the evidence is authentic or fabricated. This multi-layered approach helps ensure that digital proof stands or falls on its own merits.
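The shadow check rests on simple geometry: the sun's elevation angle follows from latitude, date and time, and a vertical object of height h on flat ground casts a shadow of length h / tan(elevation). Below is a rough Python sketch of that calculation using Cooper's textbook approximation for solar declination. It assumes `solar_hour` is local solar time (converting clock time would need longitude, time-zone and equation-of-time corrections), that shadow and object can be measured without perspective distortion, and that the 25% tolerance in the hypothetical `shadow_is_plausible` helper is just an illustrative choice. A real analysis would use a precise solar-position algorithm.

```python
import math
from typing import Optional


def solar_elevation_deg(latitude_deg: float, day_of_year: int, solar_hour: float) -> float:
    """Approximate sun elevation above the horizon, in degrees.

    latitude_deg: negative in the southern hemisphere (about -33.87 for Sydney).
    solar_hour: local solar time in hours, where 12.0 is solar noon.
    """
    # Cooper's approximation of solar declination (a simple, not survey-grade, formula).
    declination = math.radians(
        23.45 * math.sin(math.radians(360.0 * (284 + day_of_year) / 365.0)))
    # Hour angle: the sun moves 15 degrees per hour relative to solar noon.
    hour_angle = math.radians(15.0 * (solar_hour - 12.0))
    lat = math.radians(latitude_deg)
    sin_elev = (math.sin(lat) * math.sin(declination)
                + math.cos(lat) * math.cos(declination) * math.cos(hour_angle))
    # Clamp to guard against rounding just outside asin's domain.
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_elev))))


def expected_shadow_ratio(elevation_deg: float) -> Optional[float]:
    """Shadow length divided by object height for a vertical object on flat ground."""
    if elevation_deg <= 0:
        return None  # sun at or below the horizon: no direct shadow
    return 1.0 / math.tan(math.radians(elevation_deg))


def shadow_is_plausible(measured_ratio: float, expected_ratio: float,
                        tolerance: float = 0.25) -> bool:
    """Hypothetical check: measured ratio within a relative tolerance of the expected one."""
    return abs(measured_ratio - expected_ratio) <= tolerance * expected_ratio
```

For example, `expected_shadow_ratio(solar_elevation_deg(-33.87, 172, 12.0))` gives about 1.56 for Sydney at solar noon near the winter solstice, so a photo supposedly taken then that shows a person's shadow much shorter than their height would deserve a closer look.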

Protecting Yourself from False Digital Accusations

If you believe you are being framed with fabricated digital evidence, act immediately. The longer you wait, the harder it becomes to prove your innocence. The first step is to preserve your own digital footprint: if someone claims you sent a compromising video or were at a particular place, your own phone and computer can become your best witnesses.

Don't delete anything, even if it seems irrelevant. Keep your original devices safe and avoid running "cleaning" software that might overwrite system logs. Those logs can help show that your device was elsewhere or that you never used the software needed to create the fake. Contacting a professional at the first sign of trouble is crucial. They can provide surveillance services across Australia, as well as the digital analysis needed to get ahead of the false narrative and protect your reputation in court.

Unmasking AI Evidence

The world of AI-generated evidence is moving fast, and the stakes for legal and personal truth have never been higher. As these tools become more accessible, the risk of being targeted by a digital fabrication grows. You cannot rely solely on your eyes to decide what is real in a digital case; you need a structured verification process. Engaging a private investigator gives you access to the tools and reasoning needed to debunk sophisticated fakes. Whether you are dealing with a personal dispute or a corporate matter, the truth is worth the effort of a professional investigation. Don't leave your future to chance or guesswork about whether a file is authentic. Professional analysis is the most reliable way to make sure digital lies don't become your reality. Reach out for expert help today to protect your integrity.

Answers to Your Questions About Digital Proof

Can a regular person spot AI evidence?

Sometimes, yes. If the AI output is low quality, you might notice flickering around the face or strange artifacts in the background. However, high-quality deepfakes can be very difficult or impossible to spot with the naked eye, and detecting them usually requires professional forensic tools.

Is AI-Generated Evidence allowed in court?

Not if it is being passed off as genuine. The challenge is that a convincing fake may be admitted before anyone challenges it. Once an investigator shows that a file has been manipulated, the court will usually exclude it, and the person who submitted it may face penalties.

How do private investigations help prove a video is fake?

Investigators combine metadata analysis, shadow and lighting checks, and source tracing. They look for the original file's "fingerprint" and compare it against known AI-generation patterns to show the court, as precisely as possible, where and how the video was altered.